function call revectorization

function call revectorization

// CUDA nested kernel

__device__ void memcpy(int *src, int *dest, int length) {

for (int i = 0; i < length; i += blockDim.x) {

int idx = i + threadIdx.x;

dest[idx] = src[idx];

}

}

// CUDA kernel

__global__ void foo(int **srcs, int **dests, int *lengths) {

...;

int idx = threadIdx.x;

if (some_property(idx)) {

memcpy<<<32, 1>>>(srcs[idx], dests[idx], lengths[idx]);

}

...;

}

int main(void) {

...;

// kernel launch

foo<<<32, 1>>>(srcs, dests, lengths);

}

The special syntax conveys the allocation of basic resources for executing

the new kernel, as well as its scheduling. It is worth launching nested

kernels in CUDA whenever the workload for the upcoming threads is large enough.

Otherwise, if only a couple of iterations will take place, the overhead

from resource allocation and scheduling may exceed the performance improvements

of activating all threads to work on closer memory locations.

// SIMD function: should only be called under uniform control flow

void simd(argument arg) {

parallel for (thread_id in warp):

process data arg[thread_id]

}

// SPMD kernel: regular SPMD function with potential control flow divergences

void spmd(void) {

if (thread_id should be active):

crev simd(arguments)

}

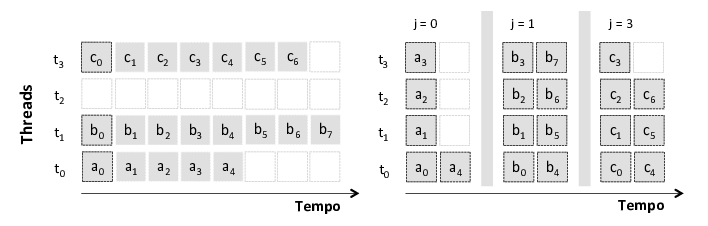

We propose a new SPMD idiom, crev, that allows SIMD functions to be

called within divergent regions. The approach is similar to a nested-kernel

call in CUDA, except it does not require extra allocation and scheduling:

the cost is equivalent to a regular function call.

// Optimized version of function "spmd" with thread re-enabling

void optimized_spmd(void) {

if (thread_id should be active):

everywhere: re-enable threads within this block {

for each thread_id formerly active:

simd(arguments for thread_id)

}

}

The code above shows an optimized version, which is the actual outcome

of applying our crev directive.

The simplicity of our approach, in comparison to the optimized version,

encourages the usage of irregular structures (linked lists)

over regular ones (matrices): crev relieves the complexity of changing

the dimension of parallelism.

// SPMD memory copy

void mem_cpy_par(uniform int8 * varying dest, const uniform int8 * varying src,

const varying int n) {

for (varying int i = 0; i < n; ++i) dest[i] = src[i];

}

// SIMD memory copy

void mem_cpy_simd(uniform int8 * uniform dest, const uniform int8 * uniform src,

const uniform int n) {

foreach (i = 0 ... n) dest[i] = src[i];

}

// Copies only pages containing the input pattern

export void bookfilter_par(const String * uniform page,

const uniform int num_pages, const uniform String& pattern,

String * uniform output) {

foreach (i = 0 ... num_pages) {

bool match = false;

str_match_par(page[i].data, page[i].length, pattern, match);

if (match != false) {

mem_cpy_par(output[i].data, page[i].data, page[i].length);

output[i].length = page[i].length;

}

}

}

The code above performs the copy of up to warp-size page[i] in parallel,

as from line 22. This means that only threads whose page matches the pattern

will be active during the call to mem_cpy_par.

Now, by simply replacing line 22 by the call to mem_cpy_simd

using crev:

crev mem_cpy_simd(output[i].data, page[i].data, page[i].length);

We can temporarily change the dimension of parallelism: we re-enable

all threads from the warp to work on the copy of the pages selected.

Check out the performance of our approach in comparison to other

possible implementations.

struct String {

uniform int length;

int8 * uniform data;

};

// Matches the pattern with the input text from the given offset

void pattern_match(uniform String& text, uniform String& pattern,

uniform int offset) {

varying bool match = true;

foreach (i = 1 ... pattern.length) {

if (pattern.data[i] != text.data[i + offset]) match &= false;

}

if (all(match)) print("match!\n");

}

// Finds the given pattern along the input text

export void String_match(uniform String& text, uniform String& pattern,

int * uniform matches, uniform int& num_matches) {

// For each character in the text

for (uniform int i = 0; i < text.length; i += programCount) {

// If such character matches the initial character from the pattern

// and we still got room for the whole pattern in the input string

varying int pos = i + programIndex;

if (text.data[pos] == pattern.data[0]

&& (pos + pattern.length) <= text.length) {

// Activate all threads to help decide whether there is a match

crev pattern_match(text, pattern, pos);

}

}

}

Check out the performance of our approach in comparison to other

possible implementations.

...Some more text... Check out the performance of our approach in comparison to other possible implementations.